Generated videos of varied aspect ratios and styles

MotionAura: Generating High-Quality and Motion Consistent Videos using Discrete Diffusion

Abstract

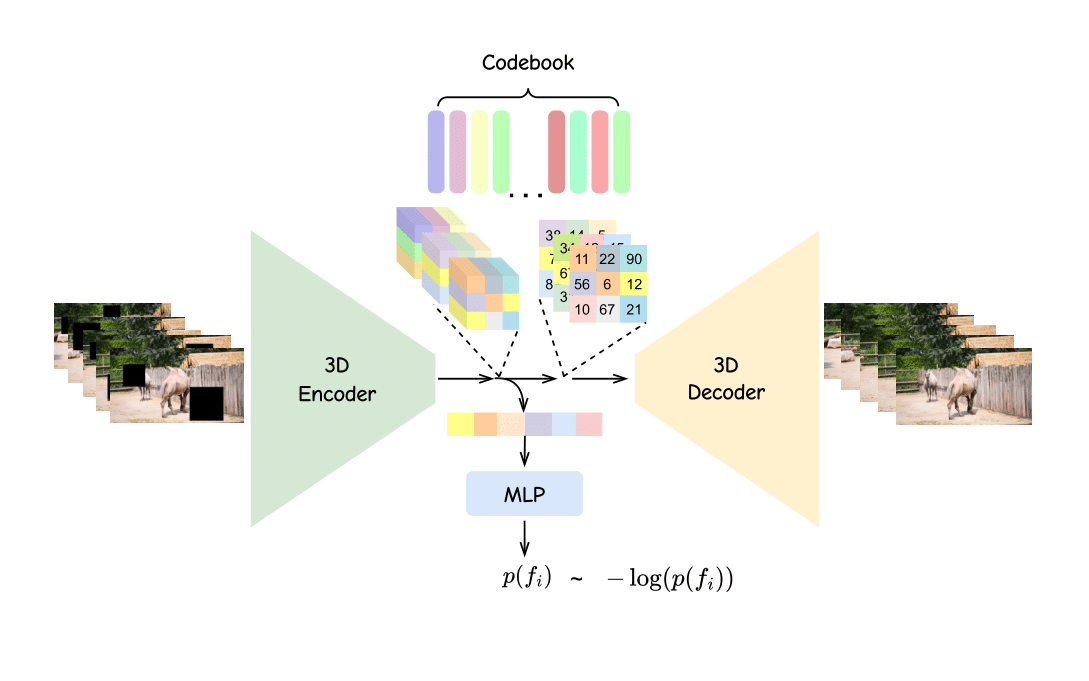

The spatiotemporal complexity of video data presents significant challenges in tasks such as compression, generation, and inpainting. We present four key contributions to address these challenges. First, we introduce the 3D Mobile Inverted Vector-Quantization Variational Autoencoder (3D-MBQ-VAE), which combines Variational Autoencoders (VAEs) with masked token modeling to enhance spatiotemporal video compression. Trained with a novel full-frame masking strategy, the model achieves superior temporal consistency and state-of-the-art (SOTA) reconstruction quality. Second, we present MotionAura, a text-to-video generation framework that uses vector-quantized diffusion models to discretize the latent space and capture complex motion dynamics, producing temporally coherent videos aligned with text prompts. Third, we propose a spectral transformer-based denoising network that processes video data in the frequency domain using the Fourier Transform. This method effectively captures global context and long-range dependencies for high-quality video generation and denoising. Lastly, we introduce the downstream task of Sketch Guided Video Inpainting, which leverages Low-Rank Adaptation (LoRA) for parameter-efficient fine-tuning. Our models achieve SOTA performance on a range of benchmarks. Our work offers robust frameworks for spatiotemporal modeling and user-driven video content manipulation. We will release the code, datasets, and models as open source.

Video Reconstruction, Generation and Sketch based Video Inpainting

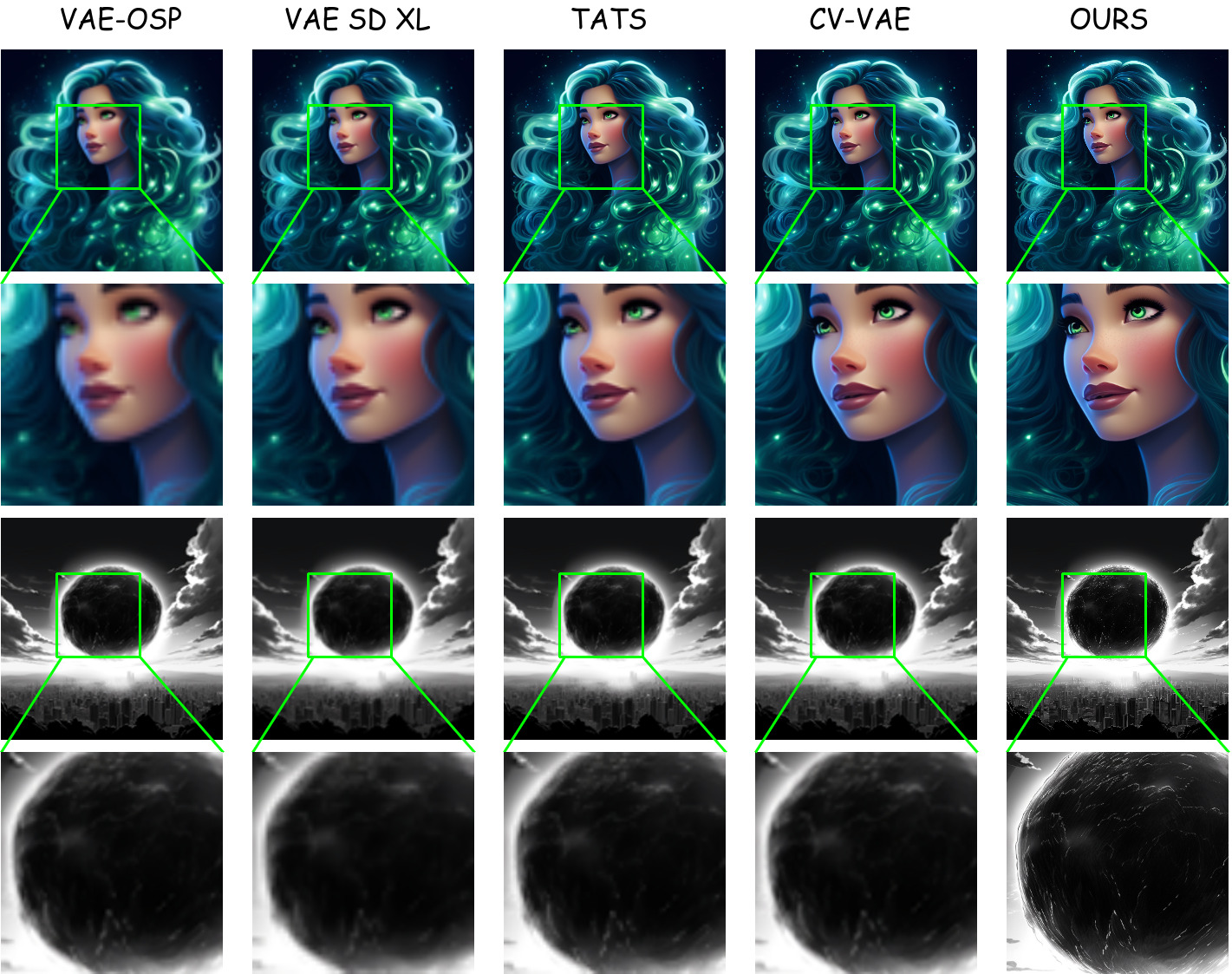

Qualitative analysis of image reconstruction using different Video VAEs

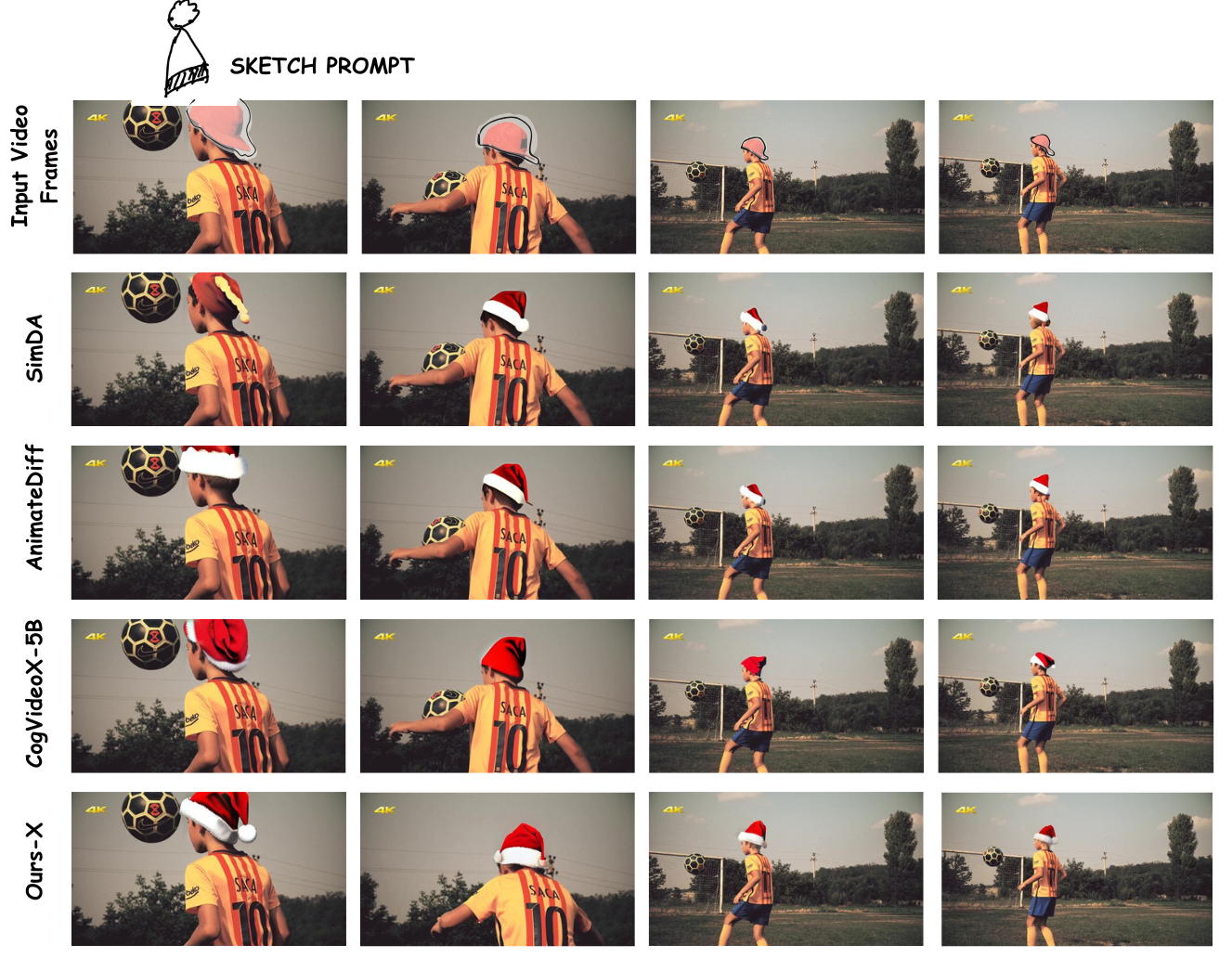

Qualitative analysis of sketch-based image inpainting

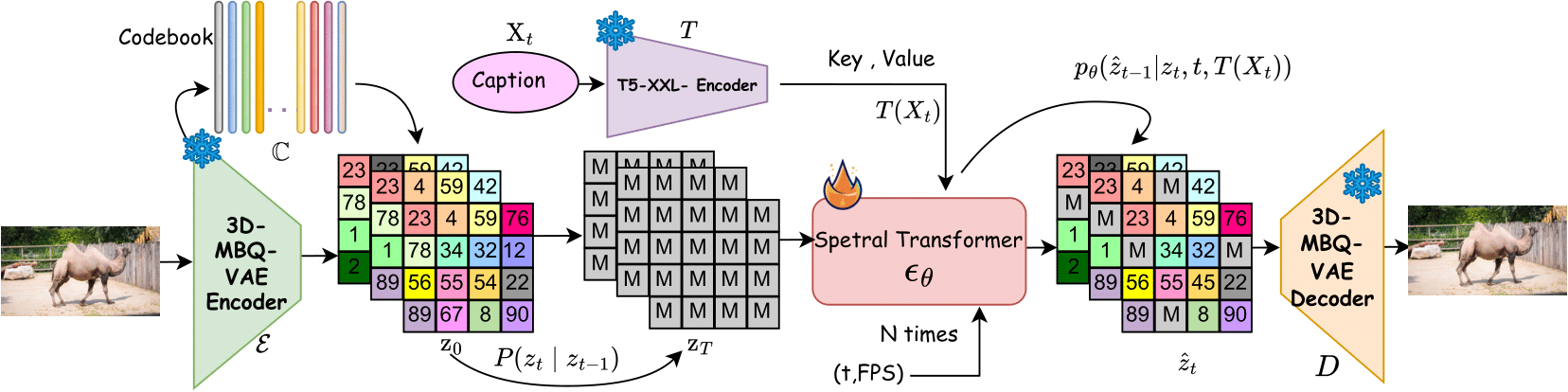

Discrete diffusion pretraining of the spectral transformer operates on tokenized video frame representations from the 3D-MBQ-VAE encoder. These representations are randomly masked according to a predefined probability distribution. The resulting corrupted tokens are then denoised through a series of N Spectral Transformers, conditioned on text representations generated by the T5-XXL-Encoder. The denoised tokens are reconstructed using the 3D decoder.
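The random masking step in this caption can be illustrated with a minimal NumPy sketch. It is not the released implementation: the codebook size, the use of one extra index as the mask token, the token grid shape, and the uniform draw of the masking probability are all assumptions made for illustration.

```python
import numpy as np

def corrupt_tokens(tokens, mask_prob, mask_id, rng):
    """Replace each token with mask_id independently with probability mask_prob."""
    mask = rng.random(tokens.shape) < mask_prob
    corrupted = np.where(mask, mask_id, tokens)
    return corrupted, mask

rng = np.random.default_rng(0)

codebook_size = 1024        # hypothetical codebook size
mask_id = codebook_size     # assumption: one extra index reserved for the mask token

# Hypothetical token grid from the video tokenizer: (frames, height, width).
tokens = rng.integers(0, codebook_size, size=(4, 8, 8))

# Assumption: the masking probability is drawn uniformly from [0, 1).
mask_prob = rng.uniform()
corrupted, mask = corrupt_tokens(tokens, mask_prob, mask_id, rng)

# A denoiser would be trained to recover tokens[mask] from `corrupted`
# together with the text conditioning.
```

Because `mask_id` lies outside the codebook range, masked positions cannot be confused with valid tokens.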

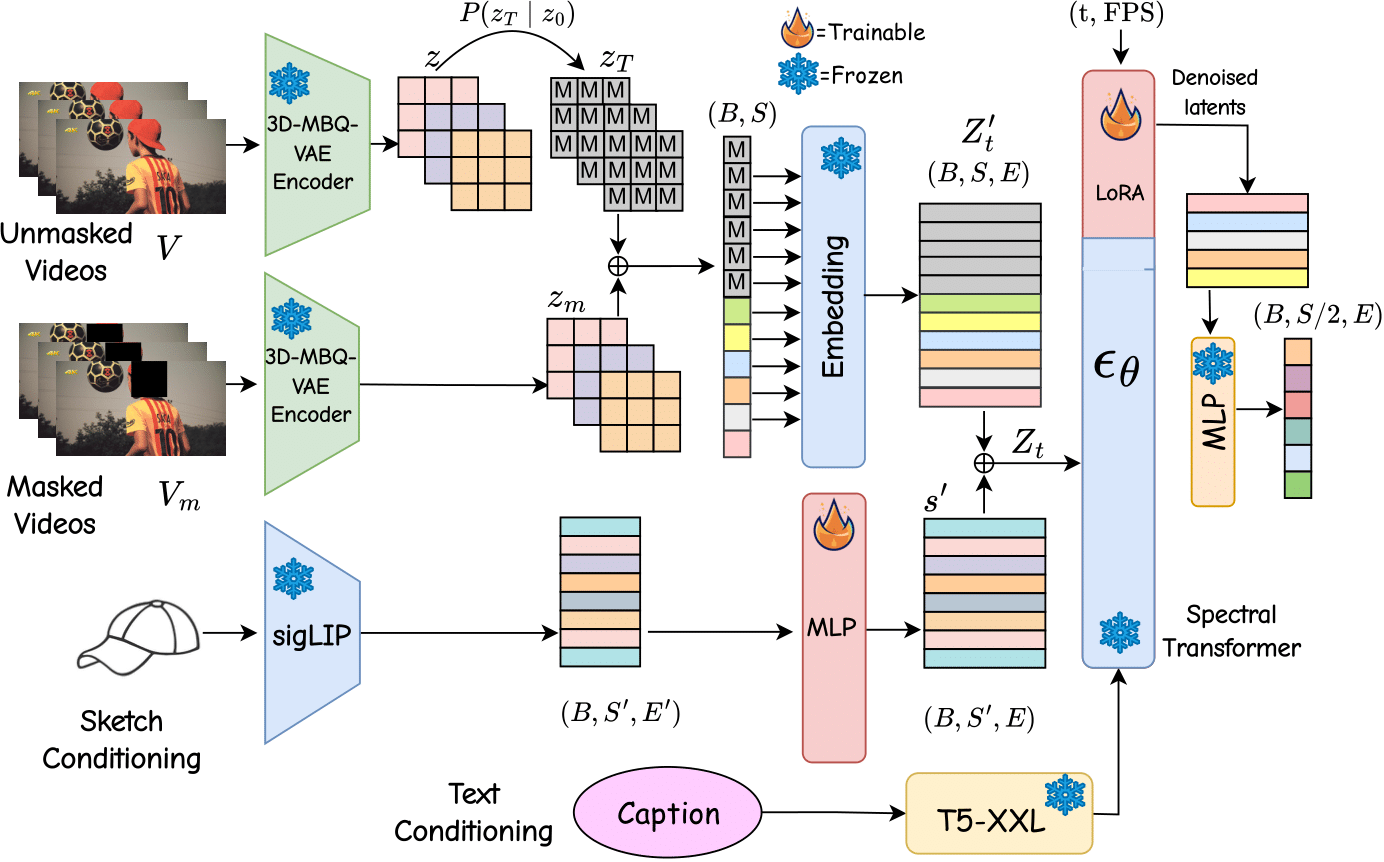

Sketch-guided video inpainting process. The network takes as input the masked video latents, the fully diffused unmasked latent, sketch conditioning, and text conditioning. It predicts the denoised latents using LoRA infused into our pre-trained denoiser ϵθ.
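To illustrate why LoRA fine-tuning is parameter-efficient, the following is a minimal NumPy sketch of a single linear layer with a low-rank update. The source does not state which layers of the denoiser receive LoRA, nor the rank or scaling used, so the class `LoRALinear`, the dimensions, `rank`, and `alpha` below are hypothetical.

```python
import numpy as np

class LoRALinear:
    """Frozen linear layer plus a trainable low-rank update: W + (alpha / rank) * B @ A."""

    def __init__(self, weight, rank, alpha, rng):
        out_dim, in_dim = weight.shape
        self.weight = weight                                  # frozen pre-trained weight
        self.A = rng.normal(0.0, 0.01, size=(rank, in_dim))   # trainable
        self.B = np.zeros((out_dim, rank))                    # trainable, zero-initialized
        self.scale = alpha / rank

    def __call__(self, x):
        base = x @ self.weight.T
        update = (x @ self.A.T) @ self.B.T
        return base + self.scale * update

rng = np.random.default_rng(0)
in_dim, out_dim, rank = 64, 32, 4            # hypothetical sizes
weight = rng.normal(size=(out_dim, in_dim))
layer = LoRALinear(weight, rank=rank, alpha=8.0, rng=rng)

x = rng.normal(size=(5, in_dim))
y = layer(x)

full_params = weight.size                    # 32 * 64 = 2048
lora_params = layer.A.size + layer.B.size    # 4 * 64 + 32 * 4 = 384
```

With `B` initialized to zero, the adapted layer reproduces the frozen layer exactly at the start of fine-tuning, and only `A` and `B` need to be updated.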

Our proposed MotionAura architecture consists of a 3D Mobile Inverted Vector-Quantization VAE (3D-MBQ-VAE) for efficient video tokenization and a discrete diffusion model to generate high-quality, motion-consistent videos. By leveraging an auxiliary discriminative loss for predicting masked frames and enforcing random frame masking, the method encourages the learning of efficient spatio-temporal features.
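As a rough illustration of random frame masking, the sketch below masks a random subset of whole frames and returns per-frame labels that an auxiliary loss could use as targets. The masking ratio, the choice to zero out masked frames, and the function name `mask_random_frames` are assumptions; the source does not specify these details.

```python
import numpy as np

def mask_random_frames(video, mask_ratio, rng):
    """Zero out a random subset of whole frames.

    video: array of shape (frames, height, width, channels).
    Returns the masked copy and a boolean vector marking the masked frames.
    """
    num_frames = video.shape[0]
    num_masked = max(1, int(round(mask_ratio * num_frames)))
    masked_idx = rng.choice(num_frames, size=num_masked, replace=False)

    is_masked = np.zeros(num_frames, dtype=bool)
    is_masked[masked_idx] = True

    masked_video = video.copy()
    masked_video[is_masked] = 0.0   # assumption: masked frames are replaced by zeros
    return masked_video, is_masked

rng = np.random.default_rng(0)
video = rng.random((16, 32, 32, 3))   # hypothetical clip: 16 frames of 32x32 RGB
masked_video, is_masked = mask_random_frames(video, mask_ratio=0.25, rng=rng)

# `is_masked` can serve as the per-frame target for a masked-frame prediction loss.
```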